Safe-Guarding LLM-Synthesised xApps with Formal Verification (Human Centric Pillar)

1 May, 2025

AI-driven applications are increasingly seen as a key enabler for economic development due to their ability to enhance and automate network management, reduce development costs, and democratise access to innovative applications for future networks. One of the main drivers for utilising AI is its effectiveness in dealing with complexity and the rapidly evolving nature of real-world network environments.

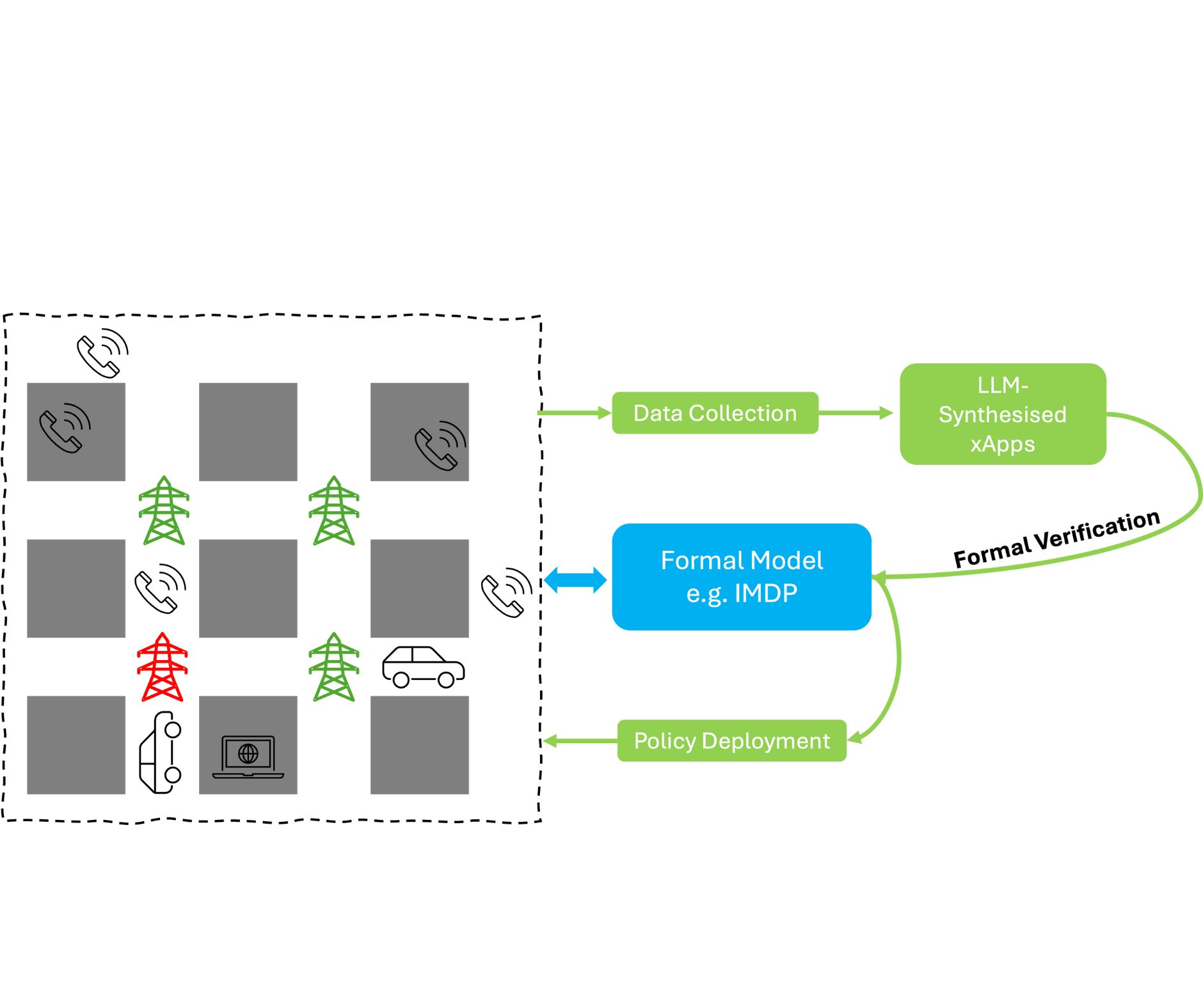

In the context of Open Radio Access Network (O-RAN), Large Language Models (LLMs) can leverage their few-shot properties to synthesise Near-Real-Time Applications (xApps). These xApps can interpret and output management policies with minimal network knowledge hence reducing the need for extensive network analysis. However, there is the risk of introducing bugs and logical inconsistencies, particularly when balancing multiple optimisation objectives. To safeguard LLMs, or AI agents in general, researchers from the University of Glasgow employ Formal Methods, a set of rigorous mathematical techniques for the specification, development, and verification of systems, to validate the output policy before they are deployed in production. This ensures system correctness and reliability. By offering precise mathematical models of system behaviour, Formal Methods can help network engineers analyse uncommon edge cases that might be missed by traditional testing techniques based on simulation.

The Glasgow group has demonstrated this approach in a power management scenario where an LLM determines the status of base stations by interpreting the current user distribution in the network. Then, the LLM outputs are validated against network performance using pre-built Formal Models based on Interval Markov Decision Processes. The loop is finally closed by determining through Formal Verification whether the LLM outputs need to be regenerated and guiding the refinement of the LLM for the next generation, hence minimising the danger of the LLM hallucinating. This novel safeguarding approach is essential to guarantee correct operativity, resilience against cybersecurity threats, cost efficiency and sustainability, particularly in sensitive and mission-critical applications like network deployments in healthcare, military, law enforcement, and autonomous vehicles.